We recently faced the challenge of making a VR game (under NDA, so we can't name the game or provide screenshots or code) for the HTC Vive (SteamVR) and the Gear VR (Oculus, running on a Samsung Galaxy). In this post, we present both headsets, the challenges they pose from a game design point of view, and some feedback from development.

The Headsets

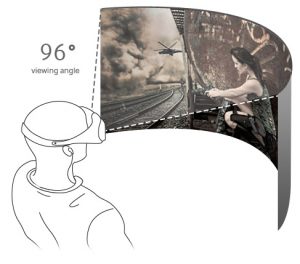

Leaving Google Cardboard aside, the Gear VR and the Vive are probably the two most different VR headsets on the current market:

The Vive is meant to run on a powerful computer at 90 FPS, with two motion controllers that each feature multiple buttons. All of this happens in a room-scale experience of at least 1.5 m × 2 m, ideally 3 m × 3 m.

The Gear runs on a high-end Samsung handheld device and has no controller. It does not track the player's position in space, only head rotation. As for input, the Gear detects swipes, a click and a back button, which is quite limited compared to what the Vive offers.

Objective

We chose Unity as our engine for the project: we already had quite a lot of experience with it, and both the Gear and the Vive have a Unity SDK. The objective was to offer the best experience on both devices. The Vive user can move around the space using room scale and point at objects with the controllers, while the Gear user should not feel restricted in any way in the gameplay.

Developing on Vive and Gear

The first thing to note is that developing for the Vive is actually quite simple. Steam provides an easy-to-use SDK with complete prefabs and multiple ways of reading controller input, and these blend very well with Unity's development logic. Gear development in Unity is also very simple, as many of the Gear's features are now integrated natively.

Get Vive inputs

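```csharp
using UnityEngine;

// Attach to a child of the SteamVR controller object (SteamVR Unity plugin 1.x API).
public class ViveShooter : MonoBehaviour
{
    private SteamVR_TrackedObject trackedObject;
    private SteamVR_Controller.Device device;

    void Start()
    {
        // The tracked controller component sits on the parent GameObject.
        trackedObject = transform.parent.GetComponent<SteamVR_TrackedObject>();
    }

    void Update()
    {
        // Look up the input device for this controller's current tracking index.
        device = SteamVR_Controller.Input((int)trackedObject.index);

        // Fire once, on the frame the trigger goes down.
        if (device.GetPressDown(SteamVR_Controller.ButtonMask.Trigger))
        {
            Shoot();
        }
    }

    void Shoot()
    {
        // Game-specific shooting logic goes here.
    }
}
```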

Get Gear inputs

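```csharp
using UnityEngine;

public class GearShooter : MonoBehaviour
{
    void Update()
    {
        // Gear touchpad click, read through Unity's "Fire1" input.
        if (Input.GetButtonDown("Fire1"))
        {
            Shoot();
        }
    }

    void Shoot()
    {
        // Game-specific shooting logic goes here.
    }
}
```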

Game Mechanics

The game we were commissioned to make was a first-person, score-based shooter with multiple shooting spots and enemy waves, the kind of thing you could find in an arcade.

To manage the difference in movement, we first worked on the least restrictive device (the Vive) and then ported the game to the Gear. In the end, a Gear user can be treated as a Vive player who never moves from the center of the room. In this design, enemies react to the player from two perspectives:

- When approaching the player, they act relative to the room's center.
- When aiming at the player or running into them, they act relative to the player's actual position.

This gives good enemy reactions toward the player on both the Vive and the Gear. The only specificity of the Gear context is that there is no difference between the room center and the player position.
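The two reference points can be sketched in plain C#. This is a minimal illustration, not the project's code, which is under NDA. `PlayerRig`, `EnemyTargeting` and the `stopDistance` parameter are hypothetical names. `System.Numerics.Vector3` stands in for Unity's `Vector3` so the sketch compiles outside the editor.

```csharp
using System.Numerics;

// Hypothetical rig state: where the room is and where the player's head is.
public sealed class PlayerRig
{
    // Center of the play area in world space (the current shooting spot).
    public Vector3 RoomCenter { get; set; }

    // Tracked head position in world space. On Gear there is no positional
    // tracking, so this is simply kept equal to RoomCenter.
    public Vector3 HeadPosition { get; set; }
}

public static class EnemyTargeting
{
    // Navigation target: a point at stopDistance from the room center, on the
    // enemy's side. A Vive player walking around does not drag the wave along.
    public static Vector3 ApproachPoint(Vector3 enemyPos, PlayerRig rig, float stopDistance)
    {
        Vector3 fromCenter = enemyPos - rig.RoomCenter;
        if (fromCenter.LengthSquared() < 1e-6f)
            return rig.RoomCenter; // enemy already at the center: stay there
        return rig.RoomCenter + Vector3.Normalize(fromCenter) * stopDistance;
    }

    // Aiming or charging direction: toward the player's actual head position.
    public static Vector3 AimDirection(Vector3 enemyPos, PlayerRig rig)
    {
        Vector3 toHead = rig.HeadPosition - enemyPos;
        return toHead.LengthSquared() < 1e-6f ? Vector3.Zero : Vector3.Normalize(toHead);
    }
}
```

Because the Gear rig keeps `HeadPosition` equal to `RoomCenter`, the same enemy code runs unchanged on both headsets.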

For aiming, we decided to give the player two shooting devices. In a Vive context, they move with the Vive controllers and shoot in the direction each controller points. This lets players aim at two enemies at the same time. In exchange, Vive players have to cope with quite a long reload time, so every shot must count.

The Gear user still has two shooting devices, but both are attached to the camera and aim where the player looks, and each click fires one or the other. The Gear user therefore can't target multiple enemies and must look where they want to shoot. To compensate for the lack of aiming freedom, we gave them a much faster reload time (×4), which means far more firepower per second.
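The asymmetric weapon tuning can be expressed as per-platform settings. The sketch below makes several assumptions:

- The ×4 factor comes from the text above, but the 2-second Vive reload time is a placeholder.
- The `Headset` enum and the `WeaponSettings` and `GunPair` types are hypothetical.
- `GunPair` assumes that each Gear click fires whichever gun is loaded, alternating between the two.

```csharp
using System;

public enum Headset { Vive, Gear }

// Hypothetical per-platform weapon settings.
public sealed class WeaponSettings
{
    // Assumed placeholder: the article only says the Vive reload is "quite long".
    public const float ViveReloadSeconds = 2.0f;
    // From the article: the Gear reloads four times faster.
    public const float GearReloadFactor = 4f;

    public float ReloadSeconds { get; }
    // true: each gun follows a Vive controller; false: both follow the gaze.
    public bool GunsFollowControllers { get; }

    private WeaponSettings(float reloadSeconds, bool gunsFollowControllers)
    {
        ReloadSeconds = reloadSeconds;
        GunsFollowControllers = gunsFollowControllers;
    }

    public static WeaponSettings For(Headset headset) => headset switch
    {
        Headset.Vive => new WeaponSettings(ViveReloadSeconds, true),
        Headset.Gear => new WeaponSettings(ViveReloadSeconds / GearReloadFactor, false),
        _ => throw new ArgumentOutOfRangeException(nameof(headset)),
    };
}

// Hypothetical Gear helper: one click fires the next loaded gun, alternating.
public sealed class GunPair
{
    private readonly float reloadSeconds;
    private readonly float[] readyAt = new float[2]; // time each gun is ready again
    private int next;                                 // gun to try first

    public GunPair(float reloadSeconds) => this.reloadSeconds = reloadSeconds;

    // Returns the index of the gun that fired, or -1 if both are reloading.
    public int TryFire(float now)
    {
        for (int i = 0; i < 2; i++)
        {
            int gun = (next + i) % 2;
            if (now >= readyAt[gun])
            {
                readyAt[gun] = now + reloadSeconds;
                next = (gun + 1) % 2;
                return gun;
            }
        }
        return -1;
    }
}
```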

So the game features a level with several shooting spots and enemy waves.

When the game starts on PC, a Vive prefab is instantiated at the first shooting spot. It contains the Vive camera, the UI elements, the shooting devices and the room dimensions.

When the game starts on a Samsung device, a Gear prefab is instantiated at the first shooting spot. It contains the Gear cameras, the UI elements, two much faster shooting devices attached to the camera, and a room size of 0 m × 0 m.
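Choosing the rig at startup can be sketched as a small factory. The sketch assumes a hypothetical `RigConfig` record whose fields mirror the prefab contents listed above. The prefab names are illustrative. The 3 m × 3 m Vive room size is a placeholder, since in practice the play area comes from the player's SteamVR setup. In Unity, the `isAndroid` flag could come from `Application.platform == RuntimePlatform.Android`.

```csharp
using System;
using System.Numerics;

public enum Headset { Vive, Gear }

// Hypothetical description of what each rig prefab contains.
public sealed record RigConfig(
    string PrefabName,       // illustrative prefab name
    Vector2 RoomSizeMeters,  // play-area width and depth
    int ShootingDevices,
    bool DevicesOnCamera);   // true: guns follow the gaze, not controllers

public static class RigFactory
{
    // PC builds get the Vive rig, Android (Samsung) builds get the Gear rig.
    public static Headset Detect(bool isAndroid) => isAndroid ? Headset.Gear : Headset.Vive;

    public static RigConfig For(Headset headset) => headset switch
    {
        // Placeholder size; the real play area is whatever the player set up.
        Headset.Vive => new RigConfig("ViveRig", new Vector2(3f, 3f), 2, false),
        // No positional tracking: a 0 m x 0 m room, so player position == room center.
        Headset.Gear => new RigConfig("GearRig", Vector2.Zero, 2, true),
        _ => throw new ArgumentOutOfRangeException(nameof(headset)),
    };
}
```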

Enemy waves react to both the room position and the player position, which happen to be the same on the Gear. When all the waves at the first spot are cleared, the player and their room are teleported to the next spot, and so on until the end of the game.
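The spot-and-wave progression amounts to a small state machine. This minimal sketch assumes a hypothetical `LevelFlow` class that only tracks the room center. In the game, moving the room center is what teleports the whole rig, and the player's tracked offset inside the room is preserved.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

// Hypothetical level flow: clear every wave at a spot, then move to the next spot.
public sealed class LevelFlow
{
    private readonly IReadOnlyList<Vector3> spots;    // shooting spot positions
    private readonly IReadOnlyList<int> wavesPerSpot; // number of waves at each spot
    private int spotIndex;
    private int wavesCleared;

    public LevelFlow(IReadOnlyList<Vector3> spots, IReadOnlyList<int> wavesPerSpot)
    {
        if (spots.Count == 0 || spots.Count != wavesPerSpot.Count)
            throw new ArgumentException("Need one wave count per shooting spot.");
        this.spots = spots;
        this.wavesPerSpot = wavesPerSpot;
    }

    // Current room center. Moving it teleports room and player together.
    public Vector3 RoomCenter => spots[spotIndex];

    public bool Finished { get; private set; }

    // Call when every enemy of the current wave is dead.
    public void OnWaveCleared()
    {
        if (Finished) return;
        wavesCleared++;
        if (wavesCleared < wavesPerSpot[spotIndex]) return; // next wave, same spot

        wavesCleared = 0;
        if (spotIndex + 1 < spots.Count)
            spotIndex++;     // teleport the rig to the next spot
        else
            Finished = true; // last spot cleared: end of the game
    }
}
```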

Development order

Developing for the Gear first could seem tempting. The Gear has less GPU power and fewer interactions, and everything it offers is also available on the Vive. However, developing for the Gear is a little more complicated because every test requires an Android build. It is also easy to forget that a good Vive experience requires space to move around and good controller integration. Both are often skipped entirely in games developed for Oculus and then ported to the Vive. In the worst cases, such as InCell, room scale simply lets the player walk out of the game area!

So a good approach is to develop for the Vive, which gives quick test iterations and the complete experience, while keeping the Gear's performance in mind. Keep asking yourself: does my game still work, and is it still fun, if I don't move around?

Complex Vive controller interactions are also out of the question, unless they have a much simpler alternative for Gear users (a click or a swipe).
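One way to guarantee a simple Gear alternative is to route all gameplay through abstract actions rather than raw buttons. In this sketch, the `GameAction` enum, the `InputMap` class and the raw input names (`"Trigger"`, `"TouchpadClick"`, etc.) are hypothetical labels, not SteamVR or Oculus identifiers.

```csharp
using System.Collections.Generic;

public enum GameAction { Shoot, Back }

public static class InputMap
{
    // Hypothetical raw input names per headset, mapped to game actions.
    private static readonly Dictionary<string, GameAction> Vive = new()
    {
        ["Trigger"] = GameAction.Shoot,
        ["ApplicationMenu"] = GameAction.Back,
    };

    private static readonly Dictionary<string, GameAction> Gear = new()
    {
        ["TouchpadClick"] = GameAction.Shoot,
        ["BackButton"] = GameAction.Back,
    };

    // Translate a raw input into a game action, or null if it maps to nothing.
    public static GameAction? Resolve(bool isGear, string rawInput) =>
        (isGear ? Gear : Vive).TryGetValue(rawInput, out var action) ? action : (GameAction?)null;

    // Design check: every action reachable on Vive must also be reachable on Gear.
    public static bool GearCoversVive() =>
        new HashSet<GameAction>(Gear.Values).IsSupersetOf(Vive.Values);
}
```

Gameplay code then only asks whether `GameAction.Shoot` happened, and a check like `GearCoversVive()` can flag any Vive-only interaction early.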

Conclusion

To conclude, making a small game for both the Vive and the Gear is quite simple, but developers should be aware of all the VR limitations (motion sickness, etc.). Moreover, while nearly every Gear feature can be ported to the Vive, the opposite is not true: the Gear can't detect the player's position and has no hand controllers.

Coding for the most complex scenario (moving controllers and player, room scale, etc.) and then simplifying it is generally a good way to go.

Finally, making regular builds on both target devices is necessary because of the huge power disparity between a computer and a handheld device.